Energy Consumption in Data Centers: Air Versus Liquid Cooling

Rising Energy Demand in Data Centers

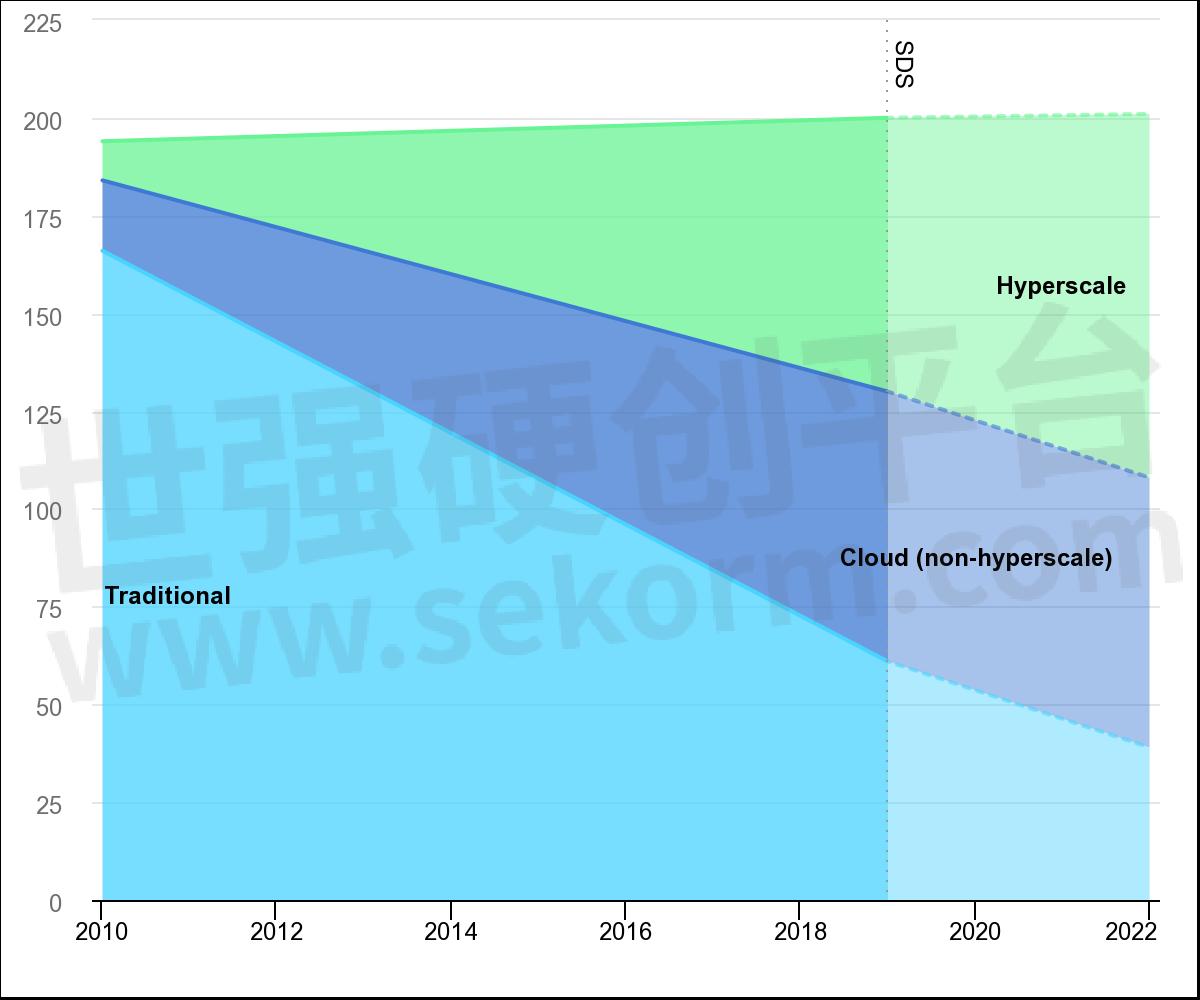

Growing demand for digital services, including cloud computing, artificial intelligence, and other data-intensive technologies, is increasing global data center energy consumption. According to the International Energy Agency (IEA), data centers consumed an estimated 200 TWh of electricity in 2022, and that figure is expected to reach 400 TWh by 2030. As the data center industry evolves to address rising energy consumption, investors have opportunities to support and capitalize on advances in energy-efficient technologies that improve sustainability.

Cooling and Energy Consumption in Data Centers

McKinsey & Company estimates that cooling accounts for nearly 40% of the total energy consumed by data centers, which underscores how important efficient cooling practices are for reducing energy consumption and improving overall efficiency.

Nearly 40% of data center energy consumption goes to cooling

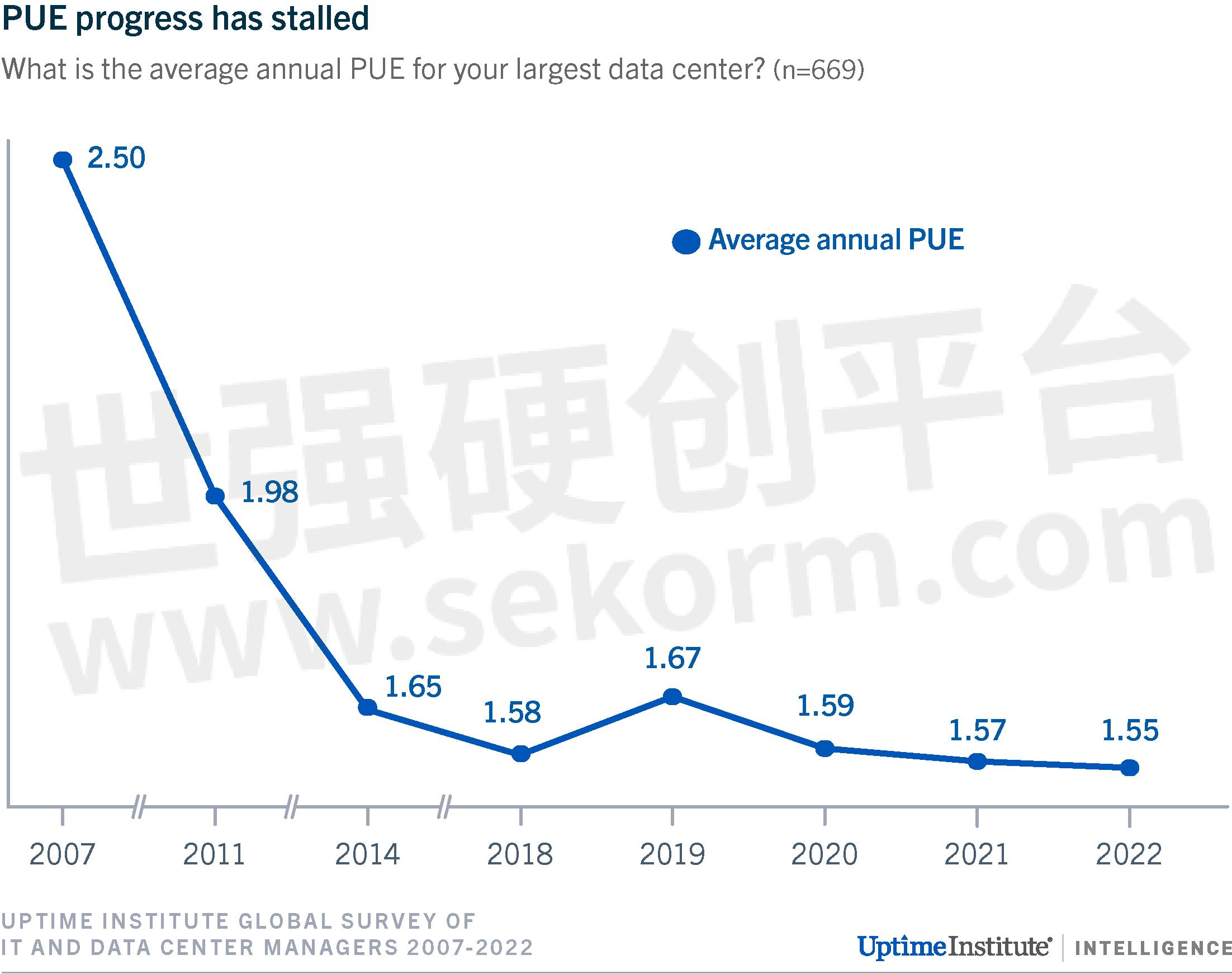

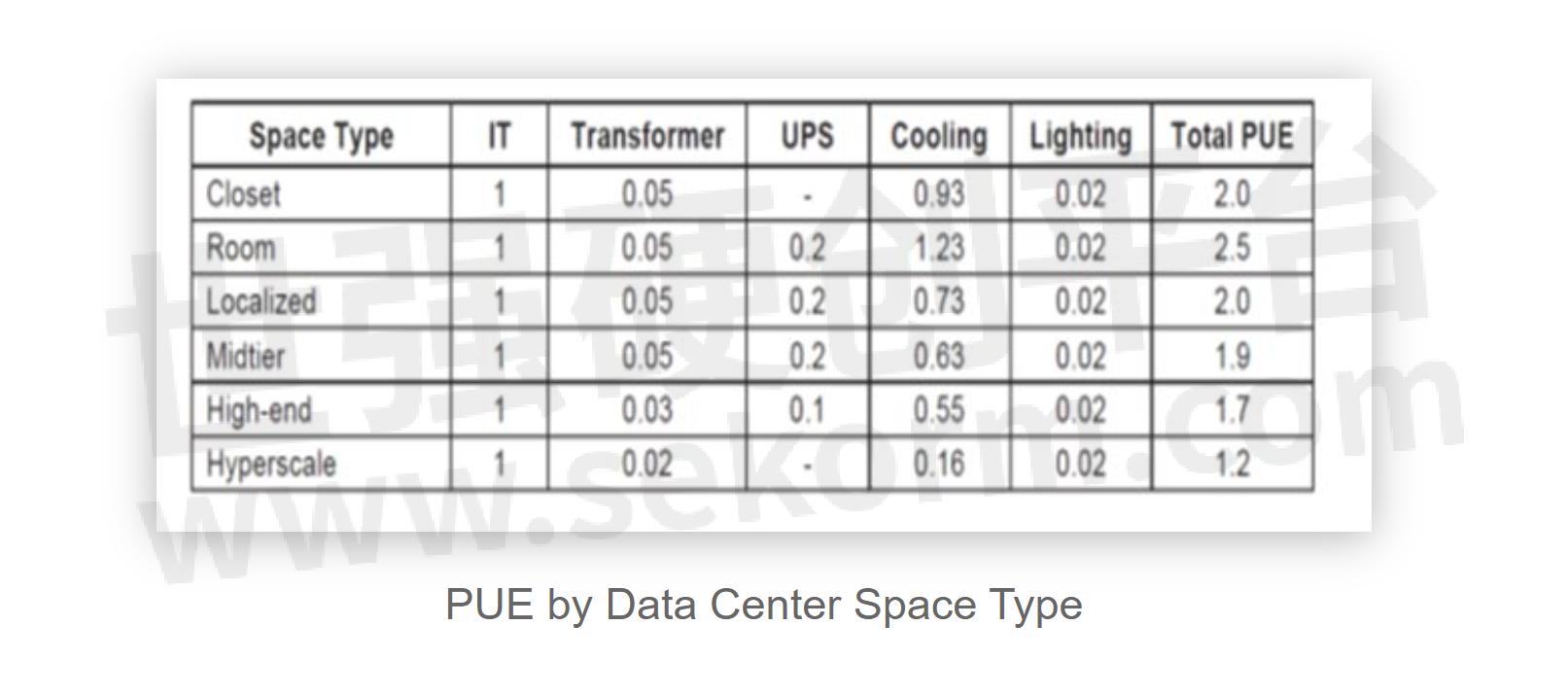

Data centers use a common metric known as Power Usage Effectiveness (PUE) to measure energy efficiency. PUE is the ratio of the total energy consumed by a data center to the energy consumed by its IT equipment alone.

PUE = Energy consumed by the whole facility / Energy consumed by the IT Equipment

A PUE of 1.0 is the ideal: every watt the facility draws reaches the IT equipment, with nothing spent on cooling, power distribution, or lighting. A PUE of 2.0 means the facility as a whole draws twice as much power as the IT equipment, so the supporting infrastructure consumes as much power as the IT load itself. Data center operators track PUE to meet efficiency targets and identify areas for improvement. According to the Uptime Institute, the average annual PUE reported in 2022 was 1.55, a slight improvement over the 2021 average of 1.57 and consistent with the marginal annual gains the Uptime Institute has observed since 2014.

Data centers aim to bring PUE down toward 1.0, maximizing compute performance per unit of energy, but the 2022 average was 1.55
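To make the ratio concrete, here is a minimal Python sketch of the PUE formula. The function names and the MWh readings are hypothetical, chosen only for illustration. The second helper re-expresses PUE as the share of facility energy that never reaches IT equipment, which shows how small the 2021-to-2022 change is: about 36.3% of energy went to infrastructure at a PUE of 1.57 versus about 35.5% at 1.55.

```python
def pue(facility_energy: float, it_energy: float) -> float:
    """Power Usage Effectiveness = total facility energy / IT equipment energy."""
    if it_energy <= 0:
        raise ValueError("IT energy must be positive")
    if facility_energy < it_energy:
        # Facility energy includes the IT load, so it can never be smaller.
        raise ValueError("facility energy cannot be less than IT energy")
    return facility_energy / it_energy


def infrastructure_share(pue_value: float) -> float:
    """Fraction of total facility energy spent on non-IT infrastructure."""
    return 1.0 - 1.0 / pue_value


# Hypothetical annual readings in MWh (not from the article).
print(pue(1_000, 1_000))  # ideal case: all energy reaches IT equipment
print(pue(2_000, 1_000))  # infrastructure draws as much as the IT load

# Industry averages quoted in the text.
for year, value in [(2021, 1.57), (2022, 1.55)]:
    print(year, f"{infrastructure_share(value):.1%} of energy to infrastructure")
```

Framing PUE as an infrastructure share is only a change of units, but it makes year-over-year movements easier to read than a ratio near 1.5.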

The most efficient large hyperscale facilities achieve a PUE of 1.2, meaning that for every kW of power used for IT work, another 200 W goes to cooling and other infrastructure. Many other facilities have PUE values above 1.6, which means more than 600 W of overhead for every kW of IT load.

For each kW of IT load, it takes another 200-600 W to run cooling and other infrastructure
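The overhead figures above follow directly from the PUE definition: non-IT power per kW of IT load is (PUE - 1) × 1,000 W. The sketch below assumes a hypothetical constant 1 MW IT load running all year (8,760 hours) to show what the gap between a PUE of 1.2 and 1.6 means in annual energy. The load and the helper names are illustrative, not taken from the source.

```python
HOURS_PER_YEAR = 8_760  # 365 days x 24 h


def overhead_watts_per_it_kw(pue_value: float) -> float:
    """Non-IT power (cooling, power distribution, lighting) per kW of IT load."""
    return (pue_value - 1.0) * 1_000.0


def annual_overhead_mwh(it_load_mw: float, pue_value: float) -> float:
    """Annual non-IT energy for a constant IT load at a given PUE."""
    return it_load_mw * (pue_value - 1.0) * HOURS_PER_YEAR


IT_LOAD_MW = 1.0  # hypothetical constant IT load

for value in (1.2, 1.6):
    print(
        f"PUE {value}: {overhead_watts_per_it_kw(value):.0f} W per IT kW, "
        f"{annual_overhead_mwh(IT_LOAD_MW, value):,.0f} MWh/yr of overhead"
    )
```

Under that assumption, the PUE 1.6 facility spends roughly 3,500 MWh more per year on overhead than the PUE 1.2 facility (about 5,256 versus 1,752 MWh).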

Improve Energy Efficiency with Innovative Cooling Technologies

Efficient cooling practices play a crucial role in achieving a lower PUE. By implementing innovative cooling technologies such as liquid cooling, hot and cold aisle containment, or optimized airflow management, data centers can reduce the energy consumed by cooling infrastructure and improve overall energy efficiency. Liquid cooling adoption is gaining momentum because it cools more efficiently and effectively than air cooling, especially for high-density IT racks.

Energy-efficient liquid cooling drives down PUE compared to air cooling
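One reason liquid handles high-density racks better is physical: water carries far more heat per unit volume than air. The sketch below applies the sensible-heat balance Q = ρ · V̇ · c_p · ΔT to estimate the coolant flow needed to remove a hypothetical 30 kW rack load with a 10 K temperature rise. The property values are approximate room-temperature textbook figures, and the rack load and ΔT are assumptions for illustration, not values from the article.

```python
# Approximate fluid properties near room temperature (assumed textbook values).
AIR_DENSITY = 1.2         # kg/m^3
AIR_CP = 1_005.0          # J/(kg*K)
WATER_DENSITY = 1_000.0   # kg/m^3
WATER_CP = 4_180.0        # J/(kg*K)


def volumetric_flow_m3_s(heat_w: float, density: float, cp: float, delta_t_k: float) -> float:
    """Coolant volume flow needed to carry heat_w with a temperature rise of delta_t_k.

    Rearranged from Q = rho * V_dot * cp * dT  ->  V_dot = Q / (rho * cp * dT).
    """
    return heat_w / (density * cp * delta_t_k)


rack_heat_w = 30_000.0  # hypothetical high-density rack load
delta_t = 10.0          # hypothetical coolant temperature rise, K

air = volumetric_flow_m3_s(rack_heat_w, AIR_DENSITY, AIR_CP, delta_t)
water = volumetric_flow_m3_s(rack_heat_w, WATER_DENSITY, WATER_CP, delta_t)

print(f"air:   {air:.2f} m^3/s")
print(f"water: {water * 1000 * 60:.1f} L/min")  # m^3/s -> L/min
print(f"air needs ~{air / water:,.0f}x the volume flow of water")
```

With these assumptions, air needs roughly 2.5 m³ of flow per second while water needs only about 43 L per minute, a volume-flow ratio on the order of 3,500 to 1. Moving that volume of air requires substantial fan power, which helps explain the efficiency advantage of liquid cooling at high rack densities.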

A PUE analysis of a high-density air-liquid hybrid cooled data center, published by the American Society of Mechanical Engineers (ASME), studied a gradual transition from 100% air cooling to 25% air / 75% liquid cooling. PUE decreased as the share of liquid cooling increased. In the 75% liquid cooling case, facility power consumption was 27% lower and total data center site power was 15.5% lower than with 100% air cooling.

Even a partial, 75% transition from air to liquid cooling reduces facility power use by 27%

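The PUE metric does not account for the efficiency of the IT or networking equipment itself, and it was designed as a benchmark for tracking efficiency gains over time within a single facility, not for comparing one facility against another. Even so, it remains the de facto standard for measuring and comparing data center energy efficiency, and despite its shortcomings it provides a useful baseline for assessing and improving a facility’s infrastructure efficiency. One consequence matters for liquid cooling: server fans are counted as IT load, so when liquid cooling removes or slows those fans, the IT denominator shrinks and PUE can stay flat or even rise while total energy use falls.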

Liquid cooling is so effective at improving IT equipment performance and reducing the energy required for cooling that it makes the industry’s PUE metric obsolete

The data center industry continues to develop new metrics that measure energy efficiency more accurately. Total-power Usage Effectiveness (TUE) is one such metric: it extends PUE inside the IT equipment, so that energy spent on server fans and power-supply losses counts as overhead rather than as useful computing, alongside the cooling system and other facility loads.

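TUE = ITUE × PUE = Energy consumed by the whole facility / Energy consumed by the computing components (processors, memory, and storage)

where ITUE (IT-power Usage Effectiveness) = Energy consumed by the IT equipment / Energy consumed by the computing components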
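To see why PUE can mislead once liquid cooling removes server fans, the sketch below compares two hypothetical facilities with the same computing load. It uses the standard TUE definition (TUE = ITUE × PUE, where ITUE is IT equipment energy divided by the energy that reaches processors, memory, and storage). The `Facility` class, its field names, and all energy figures are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Facility:
    """Hypothetical annual energy breakdown in MWh (illustrative numbers only)."""
    compute: float         # processors, memory, storage
    in_server_aux: float   # server fans and power-supply losses (PUE counts these as IT load)
    infrastructure: float  # cooling plant, power distribution, lighting

    @property
    def it(self) -> float:
        return self.compute + self.in_server_aux

    @property
    def total(self) -> float:
        return self.it + self.infrastructure

    def pue(self) -> float:
        return self.total / self.it

    def itue(self) -> float:
        return self.it / self.compute

    def tue(self) -> float:
        # Equivalent to itue() * pue(): total energy over compute energy.
        return self.total / self.compute


# Same compute load; the liquid-cooled case has far less fan energy.
air = Facility(compute=800, in_server_aux=200, infrastructure=500)
liquid = Facility(compute=800, in_server_aux=80, infrastructure=450)

for name, f in [("air", air), ("liquid", liquid)]:
    print(f"{name:6s} total={f.total:.0f} MWh  PUE={f.pue():.3f}  TUE={f.tue():.3f}")
```

In this made-up example, the liquid-cooled facility uses 1,330 MWh instead of 1,500 MWh, yet its PUE rises slightly (from 1.50 to about 1.51) because fan energy left the IT denominator. TUE, which measures against the computing components, falls from 1.875 to about 1.66 and reflects the saving.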

BOYD’s Innovative Data Center Cooling Solutions

Boyd has decades of experience and expertise in innovating and manufacturing cooling solutions like coolant distribution units, 3D vapor chambers, liquid loops and cold plates, remote heat pipe assemblies, and chillers for data centers. Leverage Boyd’s liquid cooling and material science heritage to design innovative AI-based solutions optimized for performance, reliability, and energy efficiency.

Boyd’s engineering and material science expertise allows us to design custom cooling solutions for specific data center types. To learn more about Boyd’s thermal management solutions or to discuss your project needs, schedule a consultation with Boyd’s experts.

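This article was reposted by 洛希 from BOYD Blogs; original title: Rising Energy Demand in Data Centers. All reposted articles on this site are shared to convey more information, with their sources clearly credited. Media outlets or individuals who do not wish their content to be reposted may contact us, and we will remove it immediately.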
