GreenWaves Building Occupancy Management Solution Using the TensorFlow Object Detection API

1 Building occupancy management solution introduction

GreenWaves has developed a people counting solution for occupancy management in smart building systems, providing real-time insights into how available space is used by employees and customers. The sensor can be used for tasks such as meeting room or cafeteria usage optimization, desk reservations, and usage-based cleaning.

People counting with infrared sensors offers best-in-class accuracy while fully complying with privacy-related regulations for indoor environments. GAP processors combine the computing ability needed for AI with the low energy operation that this type of application requires.

As part of our development process, we needed to train an optimized neural network with a single shot detector (SSD) backend. The TensorFlow Object Detection API comes with a number of prepackaged backbone models, but we wanted to design something more optimized for our detection task. We aimed to:

● reduce memory

● reduce complexity, and

● reduce power consumption

In this document, we show how we carried this out and how a custom network design can still leverage the SSD backend creation offered by the TensorFlow Object Detection API. We hope this will allow you to:

● become familiar with an object detection API like the one provided by TensorFlow.

● learn how to modify the API with respect to your custom specifications (i.e., model structure).

● learn how to employ the API for custom solutions such as occupancy management.

● learn how to generate optimized code for running your solution on GreenWaves’ GAP processors.

2 Object detection API

Constructing, training, and deploying machine learning models for the localization and identification of multiple objects is a challenging task. To make this easier, we leveraged the TensorFlow Object Detection API, an open source framework for object detection built on top of TensorFlow. The API includes a group of useful object detection methodologies, including:

● Single Shot MultiBox Detector (SSD)

● CenterNet

● RCNN

● EfficientDet

● ExtremeNet

See the TensorFlow 2 Detection Model Zoo and the TensorFlow 1 Detection Model Zoo for the full list of object detection methodologies supported by the API. These solutions differ in network architecture, training, and optimization strategy. If you are interested in finding out more, you can read this article for more details on different frameworks along with their advantages and disadvantages.

Although these frameworks exhibit different characteristics, all of them employ deep convolutional neural networks (CNNs), called backbone models, to extract high-level features from the input images. In fact, it has become normal practice to adapt modern state-of-the-art CNNs as feature extractor backbones. This is achieved by removing the final fully connected classification layers from a CNN, leaving a deep neural network that extracts semantic meaning from the input image while preserving its spatial structure.

The following are some useful CNN structures that can be used for backbone models of detectors:

● VGGNet

● MobileNet

● ResNet

● GoogLeNet

● DenseNet

● Inception

TensorFlow already provides a collection of detection models whose backbones are CNNs pre-trained on datasets such as COCO and ImageNet. These models can be used for out-of-the-box inference if the target categories are already included in these datasets. Otherwise, they can be used to initialize the model when training on new datasets.

In the rest of this article, we will focus on the SSD object detection algorithm and show you how to use the TensorFlow Object Detection API to develop your own detection network.

2.1 Single Shot MultiBox Detector (SSD)

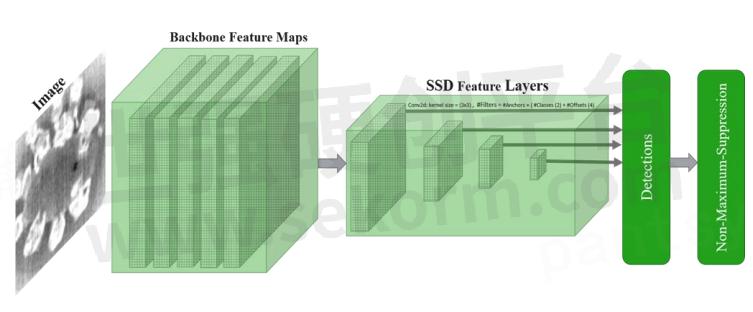

An SSD network has two principal components: a backbone model and an SSD head. As explained earlier, the backbone is typically a CNN model that may be inherited from a state-of-the-art deep model trained on datasets like ImageNet and COCO. The SSD head consists of one or more convolutional layers added to the end of the backbone network, where object bounding box classification takes place. The SSD head layers predict the offsets to a designed set of default bounding boxes of different scales and aspect ratios, together with the associated confidence scores (Figure 1).

Figure 1. An SSD model structure that adds several feature layers to the end of a base network that predicts the offsets to default boxes of different scales and aspect ratios and their associated confidences
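To make the idea of default boxes of different scales and aspect ratios more concrete, here is a minimal sketch in plain Python. It is not the API's anchor generator; the function name `default_boxes`, the single scale per feature map, and the chosen values are assumptions made for illustration only.

```python
import math


def default_boxes(feature_map_size, scale, aspect_ratios):
    """Return normalized (cx, cy, w, h) default boxes for one square feature map.

    Hypothetical helper: one box per aspect ratio is centered on every cell.
    """
    boxes = []
    for row in range(feature_map_size):
        for col in range(feature_map_size):
            # Box centers sit in the middle of each feature map cell.
            cx = (col + 0.5) / feature_map_size
            cy = (row + 0.5) / feature_map_size
            for ratio in aspect_ratios:
                # Width and height keep the area equal to scale**2.
                w = scale * math.sqrt(ratio)
                h = scale / math.sqrt(ratio)
                boxes.append((cx, cy, w, h))
    return boxes


boxes = default_boxes(feature_map_size=4, scale=0.2, aspect_ratios=(1.0, 2.0, 0.5))
print(len(boxes))  # 4 * 4 cells * 3 aspect ratios = 48
```

The SSD head then predicts, for every such box, a set of offsets and one confidence score per class.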

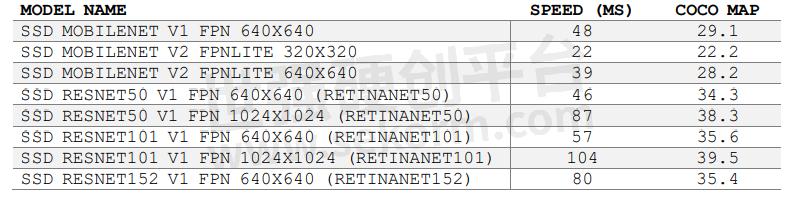

As can be seen in Table 1, TensorFlow provides various SSD heads backboned to a collection of pre-trained models to facilitate the training process.

In the table, the speed refers to the running time in ms per input image, which includes all corresponding preprocessing and post-processing steps. The runtime values depend heavily on the hardware configuration. They were all produced on a single computer, so they are mainly useful as a relative measure of latency.

3 SSD solution deployment using the API

Using pretrained models as an SSD backbone eases the training process but puts constraints on the network structure. To enable efficient inference on the edge, we need to train a custom CNN solution for applications such as infrared human detection that only require a small backbone model with a relatively restricted number of parameters.

In the next section, we will show how you can modify the TensorFlow Object Detection API in order to construct any custom SSD model. If you are already familiar with the theoretical concept of a Single Shot MultiBox Detector, then this section will provide you with a concrete example that will allow you to develop any custom SSD model using the API.

3.1 API model structure

All models under the TensorFlow Object Detection API must implement the DetectionModel interface; for more details, you can take a look at the file defining the generic base class for detection models in the API:

● API: object_detection/core/model.py

At a high level, detection models receive input images and predict output tensors. At training time, output tensors are directly passed to a specified loss function while at evaluation time, they are passed to the post processing function, which converts the raw outputs into actual bounding boxes. The Object Detection API follows this structure. The model you want to train should include the five functions below:

● Preprocess applies any preprocessing operation to the input image tensor. This could include transformations for data augmentation or input normalization.

● Predict produces the model’s raw predictions that are passed to the corresponding loss or post processing functions (e.g., Non-Maximum Suppression).

● Postprocess converts raw prediction tensors into appropriate detection results (e.g., bounding box index and offset, class scores, etc.).

● Loss defines a loss function that calculates scalar loss tensors over the provided ground truth.

● Restore loads checkpoints into the TensorFlow graph.

Depending on whether you are training or evaluating the network, a batch of input images passes through a different sequence of steps, as depicted in Figure 2.

Figure 2.
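The two sequences of steps can be sketched with a toy stand-in class. This is not the API's DetectionModel base class: `ToyDetectionModel`, `train_step`, `eval_step`, and the trivial "mean intensity" prediction are all hypothetical, chosen only to show which of the five functions is called in training and which in evaluation.

```python
class ToyDetectionModel:
    """Hypothetical stand-in exposing the five functions described above."""

    def preprocess(self, images):
        # Scale 8-bit pixel values to the range [0, 1].
        return [[pixel / 255.0 for pixel in image] for image in images]

    def predict(self, preprocessed):
        # Raw prediction: one score per image (here simply the mean intensity).
        return {"scores": [sum(image) / len(image) for image in preprocessed]}

    def postprocess(self, prediction, score_threshold=0.5):
        # Turn raw scores into final decisions.
        return {"detections": [s >= score_threshold for s in prediction["scores"]]}

    def loss(self, prediction, groundtruth):
        # Scalar loss (mean squared error) over the provided ground truth.
        errors = [(s - g) ** 2 for s, g in zip(prediction["scores"], groundtruth)]
        return sum(errors) / len(errors)

    def restore(self, checkpoint):
        # Load previously saved values into the model.
        self.weights = dict(checkpoint)


def train_step(model, images, groundtruth):
    # Training: preprocess -> predict -> loss.
    return model.loss(model.predict(model.preprocess(images)), groundtruth)


def eval_step(model, images):
    # Evaluation: preprocess -> predict -> postprocess.
    return model.postprocess(model.predict(model.preprocess(images)))


model = ToyDetectionModel()
batch = [[255, 255], [0, 0]]
print(train_step(model, batch, groundtruth=[1.0, 0.0]))
print(eval_step(model, batch))
```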

3.2 API object detection models

To allow the construction of DetectionModels for various object detection methodologies (e.g., SSD, CenterNet, RCNN), different meta-architectures are implemented by the TensorFlow Object Detection API. The idea behind meta-architectures is to provide a standard way to create valid DetectionModels for each of the object detection methodologies. All object detection meta-architectures can be found at the following link:

● API: object_detection/meta_architectures

In the case of custom models, you have the option of implementing a complete DetectionModel following a specific meta-architecture. However, instead of defining a model from scratch, it is possible to create only a feature extractor that can be employed by one of the pre-defined meta-architectures to construct a DetectionModel. It should be emphasized that meta-architectures are classes that define entire families of models using the DetectionModel abstraction.

3.3 The SSD meta-architecture API

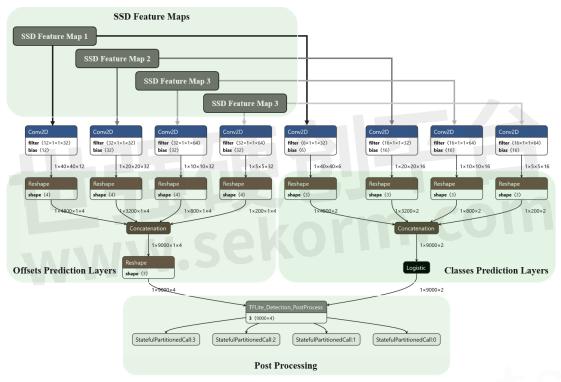

Before describing the stages in the development of a custom SSD model, it is important to understand the details of the SSD meta-architecture. As the example SSD model in Figure 3 shows, there are three principal parts to an SSD model:

● SSD feature maps

● Prediction layers (i.e., classes and offsets)

● Post processing layers

When constructing your model, the Object Detection API uses a model configuration file to automatically create prediction and post processing layers. The configuration contains the parameter values for the anchor generator (e.g., aspect ratios and scales of the default bounding boxes), the box predictors (e.g., convolution layer hyperparameters), and post processing (e.g., IoU and score thresholds). However, SSD feature maps are created by employing pre-constructed feature extractor models. The full list of SSD feature extractor models can be found at:

● API: object_detection/models

We can choose an appropriate feature extractor model from the pre-constructed models in the configuration file (i.e., feature_extractor). However, this requires us to know the mappings from model names to their pre-defined structures before changing the configuration file. This mapping can be found at

● API: object_detection/builders/model_builder.py

Also, a number of sample configuration files are provided in the following API:

● API: object_detection/configs/tf2

Figure 3. A typical SSD model constructed by the API

An SSD meta-architecture (SSDMetaArch) requires a feature extractor (SSDFeatureExtractor) to automatically construct appropriate class and offset prediction layers according to the configuration parameters (i.e., number of classes, scales, and aspect ratios). The general framework is summarized in Figure 4.

Figure 4. SSD DetectionModel construction framework
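The IoU and score thresholds mentioned among the post processing parameters are the ones that drive Non-Maximum Suppression. The following is a minimal plain-Python sketch of that step, not the API's implementation; the function names, the (ymin, xmin, ymax, xmax) box convention, and the threshold values are assumptions for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (ymin, xmin, ymax, xmax)."""
    inter_h = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_w = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_h * inter_w
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5, score_threshold=0.3):
    """Return indices of the boxes kept, highest score first."""
    # Discard low-confidence boxes, then visit the rest by decreasing score.
    candidates = [i for i, s in enumerate(scores) if s >= score_threshold]
    candidates.sort(key=lambda i: scores[i], reverse=True)
    keep = []
    for i in candidates:
        # Keep a box only if it does not overlap too much with a kept one.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30), (0, 0, 5, 5)]
scores = [0.9, 0.8, 0.7, 0.1]
print(non_max_suppression(boxes, scores))  # [0, 2]
```

Box 1 is suppressed because it overlaps box 0 with an IoU of about 0.68, and box 3 is dropped by the score threshold.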

3.4 Custom SSD model design

Now, let’s look at how we can construct a custom SSD model using API meta-architectures. While we can implement a custom SSD detection model from scratch, the previous sections have shown that the construction of a custom model can be achieved via the definition of an appropriate feature extractor model. The API will automatically create prediction and post processing layers using the configuration parameters. We only need to construct an SSD feature extractor through the SSDFeatureExtractor class. The custom feature extractor can be added to the mapping of the feature extractor in

● API: object_detection/builders/model_builder.py

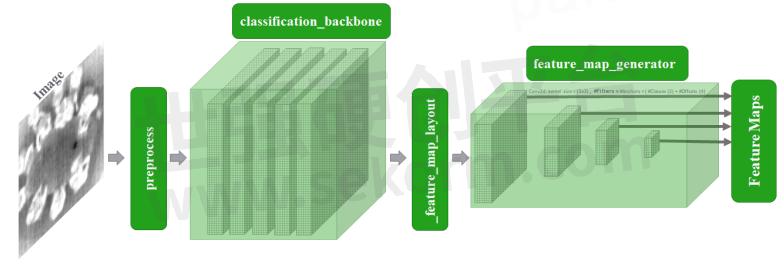

A visual representation of all the elements of the SSDFeatureExtractor class necessary to define a valid SSD feature extractor is shown in Figure 5. The class itself is defined in:

● API: object_detection/meta_architectures/ssd_meta_arch.py

Figure 5. A valid SSD feature extractor structure

In the following subsections, we explain the principal components of the SSDFeatureExtractor and show how one can construct or adapt a feature extractor for any kind of application.

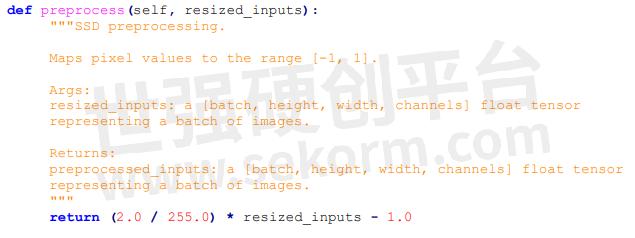

3.4.1 Preprocess

This defines the preprocessing operation that normalizes input images for the classification backbone.
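As an illustration of such a normalization, the sketch below maps 8-bit pixel values to the range [-1, 1]. The specific mapping and the function name `normalize_pixels` are assumptions; the normalization used for the infrared images in this solution is not stated here.

```python
def normalize_pixels(pixels):
    """Map 8-bit values in [0, 255] to floats in [-1.0, 1.0] (assumed mapping)."""
    return [value / 127.5 - 1.0 for value in pixels]


print(normalize_pixels([0, 255]))  # [-1.0, 1.0]
```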

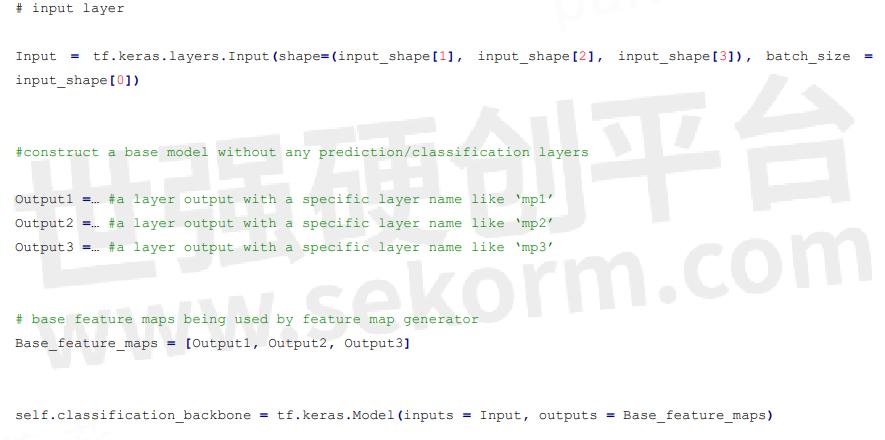

3.4.2 Classification_backbone

The classification backbone is the network structure for the extraction of basic feature maps from the preprocessed inputs.

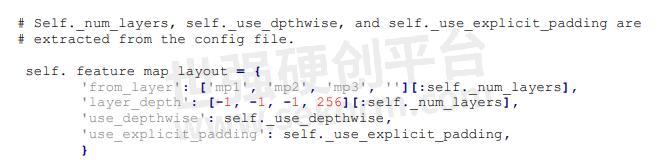

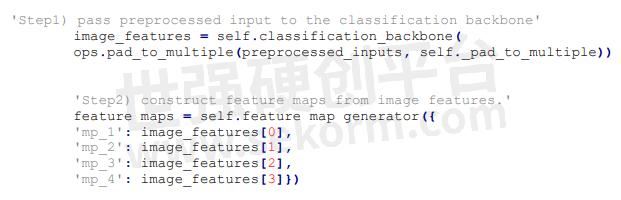

3.4.3 _feature_map_layout

This is a dictionary that determines which basic feature maps are being used to generate SSD feature maps by feature_map_generator.

In this example, the SSD model would have four prediction layers; the first three layers are provided by mp1, mp2, and mp3, and the API will automatically create the fourth (given an empty layer name, '') with 256 features. Remember that the API will use your last feature map ('mp3') as input for the fourth one.

It is very important to keep in mind that the layer names provided in the _feature_map_layout must correspond to layer names in the classification_backbone model, as the feature map generator takes those layers' outputs as its inputs for the construction of feature maps.
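A layout like the one described above can be sketched as follows. The `from_layer` and `layer_depth` keys, the empty string for a layer the generator creates itself, and the depth of -1 for reused layers follow the convention used by the API's own SSD feature extractors as far as we know. The backbone layer list and the two helper functions are hypothetical.

```python
# Hypothetical layer names exposed by a custom classification backbone.
backbone_layer_names = ["conv1", "mp1", "mp2", "mp3"]

feature_map_layout = {
    # An empty name asks the generator to build a new layer on the previous map.
    "from_layer": ["mp1", "mp2", "mp3", ""],
    # -1 keeps the depth of an existing layer; 256 is the depth of the new one.
    "layer_depth": [-1, -1, -1, 256],
}


def missing_layers(layout, layer_names):
    """Return layout names that do not exist in the backbone (hypothetical check)."""
    return [name for name in layout["from_layer"] if name and name not in layer_names]


def describe(layout):
    """Summarize where each SSD feature map comes from."""
    lines = []
    for name, depth in zip(layout["from_layer"], layout["layer_depth"]):
        if name:
            lines.append(f"{name}: reused from the backbone")
        else:
            lines.append(f"extra layer: created by the generator with {depth} features")
    return lines


print(missing_layers(feature_map_layout, backbone_layer_names))
for line in describe(feature_map_layout):
    print(line)
```

Running a check such as `missing_layers` before training is a cheap way to catch a name mismatch between the layout and the backbone.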

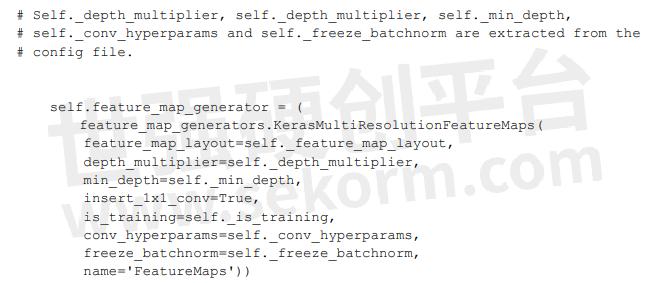

3.4.4 feature_map_generator

This is used to construct SSD feature maps from the features determined in the feature_map_layout.

The feature map generator has a function in its API to create feature maps automatically so there is no need for further coding.

3.4.5 Feature maps

SSD feature maps are created for each input image as shown below:

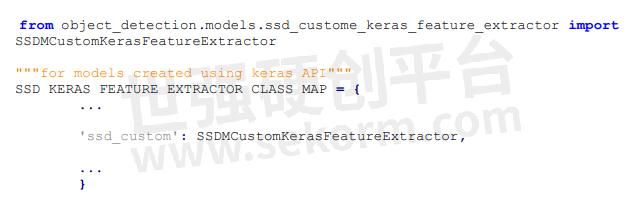

3.5 Custom model embedding

After following the instructions in Section 3.4 and constructing a custom SSD feature extractor (e.g., ssd_custome_keras_feature_extractor.py), we first have to ensure that the file has been added to the API models folder at:

● API: object_detection/models

Second, this custom feature extractor has to be added to the mapping in which all feature extractor definitions are provided, so that we can access it within the pipeline configuration file using its corresponding key. To do this, open the API model builder at object_detection/builders/model_builder.py and add the following lines:

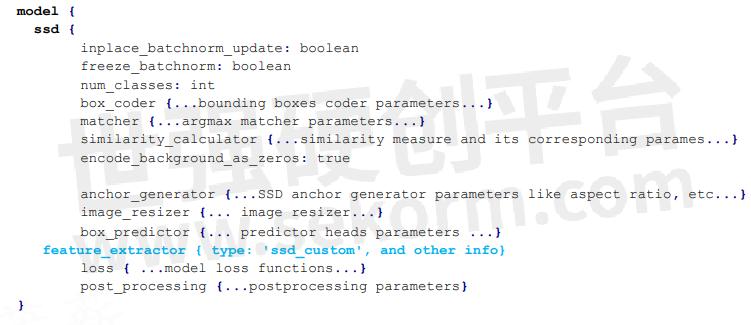
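The registration pattern can be sketched in plain Python as a dictionary that maps a configuration key to a class. Everything named here is a stand-in: `FEATURE_EXTRACTOR_CLASS_MAP`, the key `ssd_custom_keras`, the class, and `build_feature_extractor` are hypothetical and only mirror the idea of the mapping in the model builder.

```python
class SSDCustomKerasFeatureExtractor:
    """Placeholder for the custom feature extractor class (hypothetical)."""


# Stand-in for the mapping of feature extractor keys to classes.
FEATURE_EXTRACTOR_CLASS_MAP = {}

# Registering the custom extractor under the key used in the configuration file.
FEATURE_EXTRACTOR_CLASS_MAP["ssd_custom_keras"] = SSDCustomKerasFeatureExtractor


def build_feature_extractor(type_name):
    """Look up the configured key and instantiate the matching class."""
    if type_name not in FEATURE_EXTRACTOR_CLASS_MAP:
        raise ValueError(f"Unknown feature extractor: {type_name}")
    return FEATURE_EXTRACTOR_CLASS_MAP[type_name]()


extractor = build_feature_extractor("ssd_custom_keras")
print(type(extractor).__name__)  # SSDCustomKerasFeatureExtractor
```

A key that was never registered fails loudly at build time, which is the behavior you want when the configuration file and the mapping get out of sync.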

3.6 API installation

After applying all modifications related to the custom model design, follow the normal steps for the installation of the API provided on the TensorFlow website. After the installation of the API, the custom SSD feature extractor can be accessed within the configuration file:

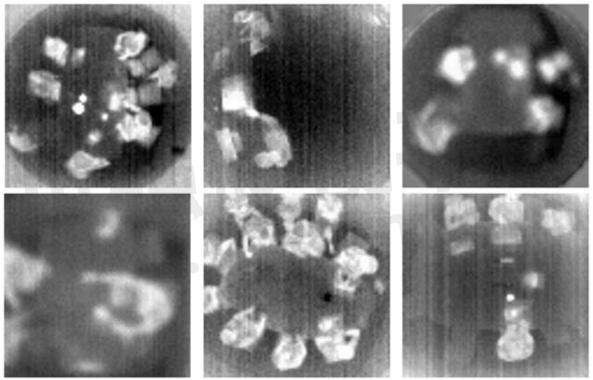

4 Occupancy management data preparation

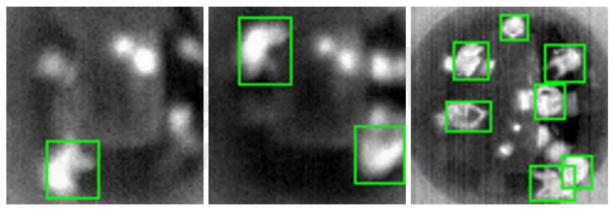

Some infrared images used for training the human detection model are shown in Figure 6. Images are acquired by various sensors installed at different locations and heights. It should be noted that some acquisitions are blurry due to bad focus adjustment after sensor installation. It would be better to adjust focus according to the sensor height, but our model will learn to work around this.

4.1 Annotations

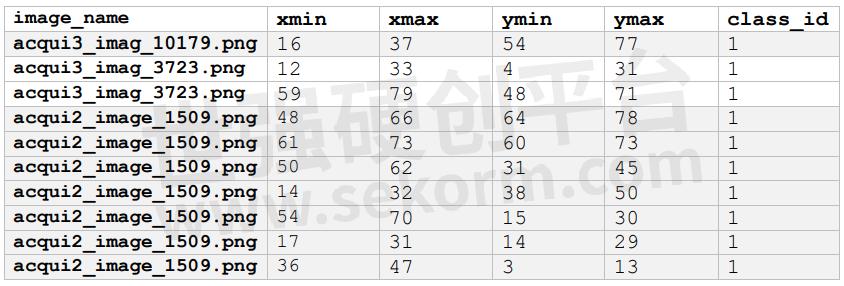

Human annotations are stored as pandas DataFrames exported to CSV files, which include image filenames and their corresponding bounding boxes. An example of this information is provided in the table below.

In Figure 7, for each input image, corresponding ground truth bounding boxes are drawn where humans are present in the frames.

Figure 7. Ground truth bounding boxes are drawn around corresponding images
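Annotations of this kind can be grouped per image with a few lines of Python. The sketch below uses only the standard library. The column names (`filename`, `xmin`, `ymin`, `xmax`, `ymax`) and the sample rows are invented for illustration and are not taken from the actual dataset.

```python
import csv
import io
from collections import defaultdict

# Made-up sample rows; the real column names may differ.
CSV_TEXT = """filename,xmin,ymin,xmax,ymax
frame_0001.png,10,12,30,40
frame_0001.png,50,8,70,36
frame_0002.png,22,20,44,52
"""


def load_annotations(csv_file):
    """Group bounding boxes (xmin, ymin, xmax, ymax) by image filename."""
    boxes_per_image = defaultdict(list)
    for row in csv.DictReader(csv_file):
        box = tuple(int(row[key]) for key in ("xmin", "ymin", "xmax", "ymax"))
        boxes_per_image[row["filename"]].append(box)
    return dict(boxes_per_image)


annotations = load_annotations(io.StringIO(CSV_TEXT))
for filename, boxes in annotations.items():
    print(filename, boxes)
```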

4.2 API data preparation

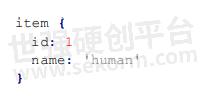

Class ids start from 1; the class id 0 is reserved for the background. In the human detection model, the label map is as follows:

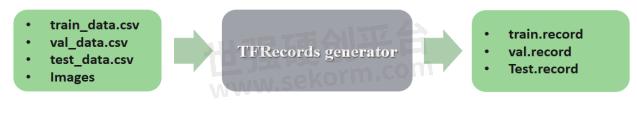
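A label map in the text format expected by the API can be generated with a small helper. This is a sketch under assumptions: the helper name `label_map_text` is hypothetical, and the class name `human` is assumed from the detection task rather than copied from the original label map.

```python
def label_map_text(class_names):
    """Build label map text with ids starting at 1 (0 is left for background)."""
    items = []
    for class_id, name in enumerate(class_names, start=1):
        items.append("item {\n  id: %d\n  name: '%s'\n}\n" % (class_id, name))
    return "\n".join(items)


text = label_map_text(["human"])
print(text)
```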

In summary, the corresponding tf_example for each image in the dataset is created and stored in the dataset’s TFRecord file (Figure 8).

Figure 8. TFRecord file generation
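Before an annotated image is written to a TFRecord file, its bounding boxes are usually normalized by the image size. The sketch below prepares the fields for one image as a plain dictionary. The feature keys follow the naming commonly used with the Object Detection API, while the helper name `example_fields`, the 80x80 image size, and the class name are assumptions. Wrapping the dictionary into an actual tf_example requires TensorFlow and is left out.

```python
def example_fields(filename, width, height, boxes, class_name="human", class_id=1):
    """Return per-image fields with boxes (xmin, ymin, xmax, ymax) normalized to [0, 1]."""
    return {
        "image/filename": filename,
        "image/width": width,
        "image/height": height,
        "image/object/bbox/xmin": [box[0] / width for box in boxes],
        "image/object/bbox/ymin": [box[1] / height for box in boxes],
        "image/object/bbox/xmax": [box[2] / width for box in boxes],
        "image/object/bbox/ymax": [box[3] / height for box in boxes],
        "image/object/class/text": [class_name] * len(boxes),
        "image/object/class/label": [class_id] * len(boxes),
    }


fields = example_fields("frame_0001.png", 80, 80, [(20, 40, 60, 80)])
print(fields["image/object/bbox/xmin"], fields["image/object/bbox/ymax"])
```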

5 Summary

In this document, we provided an overview of how to train and optimize a neural network for occupancy management applications, leveraging the SSD backend offered by the TensorFlow Object Detection API. To enable easy porting of custom NNs to GAP, we have developed GAPflow, a set of tools released by GreenWaves Technologies that allows users to accelerate the deployment of NNs on GAP while ensuring high performance and low energy consumption. The GAPflow toolset helps programmers achieve a short time-to-prototype for DL-based applications by generating GAP-optimized code from the provided DL model, and it fully supports importing detection models created using the TensorFlow Object Detection API. Watch our tutorial here.
