Which AI models can run at the Very Edge

Artificial Intelligent (AI) has already become pervasive in our everyday life. Nowadays, we are surrounded by numerous intelligent agents that support the human decision process based on real-time data. Typically, our personal data sensed through our own devices, e.g. smartwatches and smartphones, flows to a remote cloud server, where the decision is taken. Hence, the device itself is mostly used for data collection and streaming tasks, which may not be desirable because of privacy and scaling concerns but also energy-efficiency when targeting long battery lifetimes or harvesting solutions.

To address these issues, GREENWAVES Technologies is currently aiming at bringing AI agents close to the sensor sources, at the very edge. This solution brings numerous advantages, including the reduced circulation of personal data, and enables intelligence in challenging environments, e.g. for costs or physical reasons. To support the ambitious goal of smart pervasive sensing, the AI community has recently been investigating novel inference Deep Learning (DL) models optimized in terms of the number of parameters, and not only accuracy. But, still, the complexity is too high to run in real-time on current edge platforms. In fact, when leveraging low-power MCUs for smart sensor applications, the size of deployable inference models is limited both from the low memory footprint and the available computational power. Moreover, the limited energy budget poses additional constraints on the architecture of processing units for running complex DL workload on the extreme edge.

We describe here how Greenwaves Technologies covers this gap to bring complex AI agents onto devices at the very edge.

GAP8: A SoC platform tailored for Edge AI

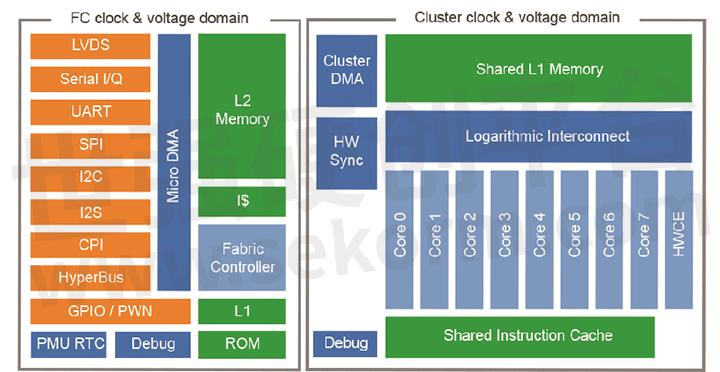

The GAP8 combines several unique features, which match the needs of edge AI applications. Firstly, GAP8 (Figure 1) can be interfaced to a heterogeneous set of external sensors through a smart peripheral sub-system, the Micro-DMA, which autonomously controls the streaming of multiple sensor data. The peripheral region is enriched by a single-core CPU, the Fabric Controller, and a 512kB L2 memory for data buffering. In addition to the Fabric Controller, an 8 core RISC-V parallel compute cluster can be powered-on to accelerate the inference time of AI workloads. The cluster includes a shared instruction cache memory and a 128kB L1 Tightly Coupled Data-Memory (TCDM), working as a scratchpad memory. To boost the energy efficiency, data transfers between L1 and L2 are manually managed through a multi-channel DMA engine. Lastly, the full platform is optimized in terms of power consumption to fit the requirements of battery-powered devices for smart sensing.

If compared to traditional low-power MCUs, GAP8 performs the sensing task with a low-energy budget on the fabric controller side but provides acceleration capabilities for AI tasks thanks to the cluster parallel engine. From a computational viewpoint, deep neural networks are an ‘embarrassingly’ parallel workload that can be efficiently mapped onto the GAP8’s cluster. Along with the HW support, Greenwaves Technologies provides a full SW stack to efficiently map any deep inference model on the GAP8 architecture, as described in the following section.

Figure 1 GAP8 processor Architecture

Deploying a 1000 class Deep Neural Network onto GAP8

To showcase the full process “from a NN framework to GAP8”, this section illustrates the individual building blocks of the Greewaves tool-flow, tailored for the deployment of a deep neural network model on GAP8. To emphasize the GAP8’s capability, we run a MobileNet V1 [1] model trained on a 1000 class image classification problem, which is commonly used also as a backbone network object detection pipelines [2]. The largest model of the MobileNet V1 family, i.e. with an input spatial size of 224×224 and a width multiplier 1.0, features 569 MMAC and 4.2M parameters and reaches a Top1 accuracy of 70.9% on ImageNet. Only by quantizing the parameters to 8 bits, the network can be fully deployed on a GAPUINO system, which couples an 8MB HyperRAM, acting as external L3 memory, together with the GAP8 processor. Details concerning the quantization-aware retraining process are also provided in the next sections.

Basic Computational Kernels

Convolution is the most common operation within a DL workload. Given a convolution layer with an input tensor with

feature channels and a weight tensor

with a receptive field of size

the output tensor with

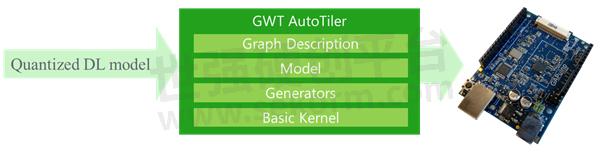

feature channels can be computed by Equation 1 . The result of convolution operation among integer

-bit operands is scaled with per-layer or per-channel factors

after the integer feature-wise bias

addition. Such a discretized model derives from [3].

Equation 1 Convolution Layer Integer Model

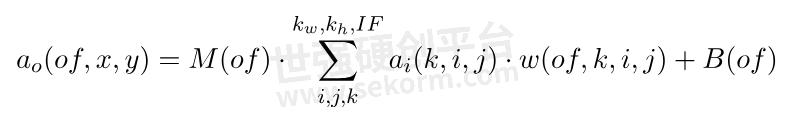

Figure 2 Greenwaves Autotiler Tool

In addition to the 4X/2X memory footprint compression, the quantization of a DL model to 8/16 bit enables the usage of low-bitwidth vector instructions for computing the dot products of Equation 1. In particular, the RISC-V cores of GAP8 come with 2×16 bit and 4x 8-bit SIMD MAC instructions that can be exploited for convolution acceleration. Such optimized instructions are used within a set of software optimized parallel kernels, denoted as Basic Kernels, which implements CNN basic operations and are distributed as part of the GAP8 SDK. These kernels feature low-bit-width operands and assume data residing in shared cluster L1 to gain maximum performance. The functionality of a convolutional network layer can be obtained by grouping together the needed basic kernels. For instance a 3×3 DepthWise layer is composed by KerParConvDWDP3x3Stride1_fps() and KerDPMulBiasScalar_fps() kernels. The first kernel realizes an 8-bit spatial 3×3 depth wise convolution with stride 1 by distributing the workload over multiple cores. The accumulation value, with a bit precision higher than input operands, is scaled by the second call and compressed back to 8 bits.

The GAP Autotiler

Since activation or weight tensors do not typically fit into the L1 memory, an automatic buffering mechanism, denoted as tiling, needs to be implemented by the GAP8 application code. The tiling mechanism transfers data between L3 to L2 and between L2 to L1 memory areas to feed the basic kernel dataflow, and back through the memory hierarchy to store output in memory. To assist programmers, the GAP8 SDK includes the GAP Autotiler tool, that, given the sizes of the convolution layers and the maximum available L1, L2 and L3 memory, automatically determines the optimal tiling strategy. It automatically computes of best size for tensor partitioning, i.e. the tiles of data, that are transferred at any iteration from L2 to L1 and from L3 to L2, and vice versa. The optimal solution is found by minimizing the tiling overhead, measured as the ratio between the total amount of data copied to L1 over the tensor dimension. For any convolution operation, an optimal tiling strategy maximizes data reuse by coping operands to the L1 memory just once, hence the best tiling overhead is equal to 1. It is worth noting that the optimality of the chosen strategy is due to the predictability of the computation dataflow: the GAP Autotiler tool solves the discrete optimization problem by selecting the solution that minimizes the tiling overhead among all the possible solutions.

The GAP Autotiler tool is composed of several building blocks, as depicted in Figure 2. The output of the tool is a C code description of a quantized network graph, which exploits the Basic Kernels mentioned above. The other components will be presented in the next paragraphs, while the quantized input graph can be produced by any DL framework (e.g. Tensorflow or Pytorch).

Generation of Convolutional Network Models

Any DL network can be coded as an Autotiler’s CNN Model, by making use of a set of APIs for the C code generation of CNN layer functions, i.e. the CNN Generators. As an example, the code of the first layer Layer0() of the MobileNetV1 model is generated by calling the Generator:

CNN_ConvolutionMulBiasPoolReLU(“Layer0”, &CtrlH, 1,1,1,1,1,0,0,-7,16,0,1,1,1,0,1,3,32,224,224,KOP_CONV_DP,3,3,1,1,2,2, 1, KOP_NONE, 3,3, 1,1, 2,2, 1, KOP_RELU);

where, besides the convolution parameters, the bit width and quantization of input, weights, output, bias and scaling factor parameters can be individually set. Hence, a GAP Autotiler model can be intuitively described as a sequence of layer generators.

The generator CNN_ConvolutionMulBiasPoolReLU() selects the best basic kernels, determines the tiling strategy and produces the layer C code. The produced code includes the basic kernel calls and the calls to the GAP SDK data transfers APIs that realize the tiling by transferring data from L2 or L3 memory, through generated calls to the DMA and Micro-DMA units. Thanks to this design methodology, the memory management is totally transparent to the user and is overlapped with layer calculation, minimally degrading the performance of the basic kernels.

Graph Generation

The latest release of the GAP8 SDK extends the functionality of the Autotiler tool with a Graph Description input format, aiming at defining the edge connections between the defined nodes, i.e. the layer function generated through the Autotiler’s Generator APIs. Such functionality serves for the generation of the inter-layer glue code to execute the network inference function. The graph is declared and opened by means of the API call CNNGraph_T* CreateGraph (), whose arguments specify the edges of the graph. Nodes are added to the opened graph with the API void AddNode (). For instance, the 21st layer of MobileNetV1 is appended to the graph by means of:

AddNode(“Layer21”,Bindings(5,GNodeArg(GNA_IN, “OutL20”, 0),GNodeArg(GNA_IN, “FL21”, 0),GNodeArg(GNA_IN, “BL21”, 0),GNodeArg(GNA_IN, “ML21”, 0),GNodeArg(GNA_OUT, “OutL21”, 0) ));

where Layer21 is the name of the generated layer function, while the second argument defines the binding between the edges of the graph and the arguments of the node function created by the layer generator described above. In this case, the 5 arguments of the layer function are connected respectively to OutL20, which is the temporary output of the previous layer, and FL21, BL21 and ML21, are the weight, bias and scaling factor parameters defined during the creation of the graph.

After defining the set of nodes and their connectivity, the call CloseGraph() triggers the processing of the CNN Graph. The output of this process are three C functions, a graph constructor and destructor and a graph runner function for running inference tasks with the given graph.

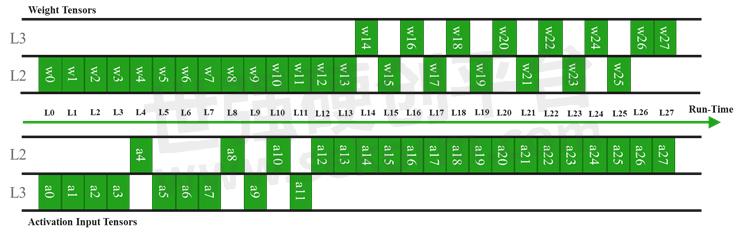

Figure 3 MobileNetV1 tensor allocation for layer-wise execution with memory constraints of 50kB and 350kB for respectively L1 and L2 buffers

When the graph description is provided to the GAP Autotiler, the memory allocation of both activation and weight tensors, i.e. the edges of the graph, is managed based on the provided memory constraints, i.e. the maximum amount of L1, L2 and L3 memory that can be used. Any activation or parameter tensor is allocated, statically or dynamically depending on the nature of the tensor, on the most convenient memory level and can be promoted at runtime to the L2 memory, if enough memory is available. Figure 3illustrates the allocation of both weight and input activation tensors at runtime when the i-th layer Li of MobileNetV1 is about to be executed. The initial layers, which have a low number of parameters but large input and output feature maps, are mostly kept in L3 while weights are promoted to L2 before layer execution. The opposite strategy is visible on the last layers.

Running the application code

Besides the C-code of the inference function MobileNetCNN(), the GAP Autotiler Tool generates the C-code functions MobileNetCNN_Construct() and MobileNetCNN_Destruct() which, respectively, allocate and deallocate the memory for static parameters. A programmer using the generated code simply calls these in their main application code to run inference tasks on the GAP8 cluster.

Training a Quantized Integer-Only MobileNetV1 model for deployment

Coming back to the input of the Autotiler tool, this section deals with network quantization aspects and targets an audience not familiar with this topic.

To gain maximum performance and the highest compression level, any deep network must be quantized to 8 bits and optionally manipulated to make use only of integer arithmetic. To avoid accuracy loss when using 8-bit quantization, a quantization-aware training process was used. Even for energy and memory-optimized network topologies, such as MobileNet V1 or V2, this has been shown not to reduce accuracy. The quantization strategy we are using symmetrically quantizes the weight parameters around zero, after folding batch normalization parameters inside the convolution layer weights. We use PACT [4] to determine the per-tensor quantization range, however other methodologies can also be applied. The PACT technique is also used to learn the dynamic range of the activation values, i.e. the output of the nonlinear functions.

Given the learned symmetric dynamic range with

of any activation or weight tensor, we derive the parameter

based on the number of bits

as done in [3] Hence, a per-layer scaling factor can be computed as

where both

and

are parameters quantized to 8 bits.

By definition, features a fixed-point

format. Given this equation any sub-graph of a fake-quantized model, which starts from the convolution input of the i-th layer and ends with the input of the it1-th convolutional layer, can be approximated with the integer-only Equation 2 without any loss

Equation 2 Integer-only approximation of a network sub-graph

of generality. Within the GAP8 toolset, this transfer function is realized by means of combinations of Basic Kernels.

In the case of MobileNet , the described quantization process leads to a final accuracy of

on ImageNet, only 0.9\% lower than a full-precision model’.

Experimental Result

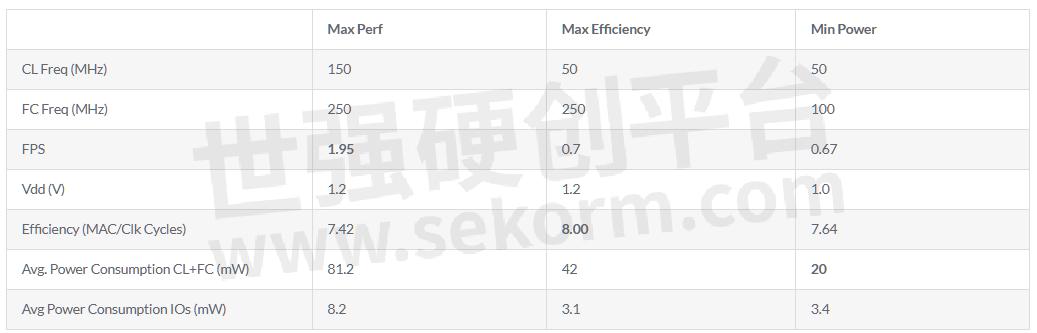

Table 1 reports the measurements on GAP8 when running the largest MobileNetV1 network (input resolution 224 and width multiplier 1.0). The network C code is generated automatically by the GAP Autotiler tool, by constraining the usage of L1 and L2 buffer sizes to, respectively, 52kB and 400kB.

Besides the latency and power measurements of the Fabric Controller (FC) region and Cluster (CL), the compute efficiency is reported in terms of MAC/cycles. We benchmarked three configurations, by varying the voltage and frequency settings:

Max Performance: targeting the fastest inference time.

Max Efficiency: maximizing the MAC/cycles metric.

Min Power: targeting the lowest average power consumption.

We show that performance ranges from nearly 2 FPS under a power consumption around 80mW to 0.67 FPS with power consumption as low as 20 mW. Top efficiency of 8 MAC/cycles can be achieved by increasing the memory bandwidth (FC clock).

Table 1 MobileNetV1 224_1.0 running on GAP8

References

[1] A. G. Howard, M. Zhu, B. Chen, D. Kalenichenko, W. Wang, T. Weyand, M. Andreetto and H. Adam, “MobileNets: Efficient convolutional neural networks for mobile vision applications,” arXiv preprint arXiv:1704.04861, 2017.

[2] W. Liu, D. Anguelov, D. Erhan, C. Szegedy, S. Reed, C.-Y. Fu and A. C. Berg, “SSD: Single shot multibox detector,” in European conference on computer vision, 2016.

[3] B. Jacob, S. Kligys, B. Chen, M. Zhu, M. Tang, A. Howard, H. Adam and D. Kalenichenko, “Quantization and training of neural networks for efficient integer-arithmetic-only inference,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018.

[4] J. Choi, Z. Wang, S. Venkataramani, P. I.-J. Chuang, V. Srinivasan and K. Gopalakrishnan, “PACT: Parameterized clipping activation for quantized neural networks,” arXiv preprint arXiv:1805.06085, 2018.

- |

- +1 赞 0

- 收藏

- 评论 0

本文由Ray转载自GREENWAVES Official Website,原文标题为:Which AI models can run at the Very Edge?,本站所有转载文章系出于传递更多信息之目的,且明确注明来源,不希望被转载的媒体或个人可与我们联系,我们将立即进行删除处理。

相关推荐

解密嵌入式多核异构方案,超高算力RK3588多核异构核心板系列一览

DSOM-040R是东胜基于Rockchip RK3588芯片开发的B2B核心板,具备4个A76核处理器,以及4个A55核处理器,东胜对此提供AMP的SDK,其中A76核用于跑Linux 系统,作为整个AMP系统的Master 。

Machine Learning Benchmarks Compare Energy Consumption

MLCommons recently conducted a round of its MLPerf Tiny 1.0 benchmarking, and Silicon Labs submitted its EFR32MG24 Multiprotocol Wireless System on Chip (SoC) for benchmarking. This compact development platform provides a simple, time-saving path for AI/ ML development.

【应用】安信可Ai-WB2模组用于锁联网方案,实现蓝牙配网功能,有效解决易掉线/断网/延迟等问题

优智云家选用安信可科技Ai-WB2模组进行产品设计,并通过SDK开发实现门锁方案的智能化升级。安信可Ai-WB2系列模组具有出色的稳定性与可靠性,协助优智云家的产品实现了蓝牙配网功能,解决了WiFi配网易掉线、易断网、易延迟的痛点问题。

GAP9评估套件(GAP 9_EVK)用户手册

本手册为GreenWaves Technologies的GAP9评估套件(GAP9_EVK)的用户指南。GAP9_EVK是一款针对GAP9芯片的评估和开发套件,GAP9芯片是一款超低功耗的应用处理器,适用于边缘AI和数字信号处理。该套件旨在评估GAP9的能力,并支持多种扩展连接,以便与额外的硬件(如传感器、低功耗无线电模块或音频板)配合使用。手册详细介绍了GAP9_EVK的架构、组件、电源管理、配置跳线设置、编程和调试方法,以及如何使用板上的LED、按钮、麦克风和GPIO扩展器。此外,还提供了关于电流测量的信息和使用技巧。

GREENWAVES - CHIP,超低功耗应用处理器,EVALUATION KIT,评估套件,芯片,ULTRA-LOW POWER APPLICATON PROCESSOR,GAP9

OAX8000汽车视频处理器产品简介

OmniVision的OAX8000是一款专为入门级独立驾驶员监控系统(DMS)优化的AI汽车专用集成电路(ASIC)。它集成了神经网络处理单元(NPU)、图像信号处理器(ISP)和DDR3内存,提供高达1.1万亿次每秒的处理速度。该芯片支持高清视频编码和解码,具有低功耗和高动态范围处理能力。

WILLSEMI - DEDICATED DRIVER MONITORING SYSTEM ASIC,AUTOMOTIVE VIDEO PROCESSOR,ASIC,汽车视频处理器,N.专用集成电路,专用集成电路;专用芯片;澳大利亚证券和投资委员会,支持AI的汽车专用集成电路,AI-ENABLED, AUTOMOTIVE APPLICATION-SPECIFIC INTEGRATED CIRCUIT,专用驾驶员监控系统ASIC,OAX8000-U96G-1A-Z,OAX8000,驾驶员监测系统,DRIVER MONITORING SYSTEM,汽车,舱内监控系统,国际监测系统,DMS,AUTOMOTIVE,IMS,IN-CABIN MONITORING SYSTEM

安信可星闪开源版SDK的环境搭建和新建工程

本次给大家带来安信可星闪开源版SDK的环境搭建和新建工程。我们推荐的开发方式是VsCode+插件的形式,简单好用。(需要有一丢丢动手能力——安装VsCode和部分软件依赖包),开始整活!

基于GAP9的TWS/耳机硬件架构及实现指南

本资料介绍了基于GAP9的TWS耳机和蓝牙连接耳机的硬件架构选项,包括单GAP9和双GAP9配置。资料提供了不同架构的示例,包括块图和实施指南,旨在帮助系统架构师和PCB设计师高效地将GAP9集成到目标耳机产品中。资料涵盖了GAP9与蓝牙SoC之间的接口、音频编解码器配置、传感器连接、功率管理、测试点、电池管理和外部存储等方面。此外,还讨论了GAP9作为纯协处理器的应用,以及与软件兼容性的影响。

GREENWAVES - 处理器,PROCESSOR,GAP9,蓝牙连接耳机,TRUE WIRELESS STEREO EARBUDS,TWS耳塞,BLUETOOTH-CONNECTED HEADPHONES,真无线立体声耳塞,TWS EARBUDS

AI ASR Chip Supporting Off-Line Automatic Speech Recognition, Widely Used in Home Appliances

CI1122 is an AI ASR chip from ChipIntelli that is widely used in home appliances, household appliances, lighting, toys, wearable equipment, automobiles and other products for voice interaction and control. CI1122 has built-in independent intellectual property right owned Brain Neural Network Processor (BNPU), which supports off-line automatic speech recognition.

GreenWaves Technologies Announced Availability of GAP8 Software Development Kit and GAPuino Development Board

GreenWaves’ pioneering GAP8 IoT Application Processor enables high-performing evaluation board and development kit.Grenoble, France and Santa Clara, Calif., May 22, 2018 – GreenWaves Technologies, a fabless semiconductor startup designing disruptive ultra-low power embedded solutions for image, sound and vibration AI processing in sensing devices, today announced the availability of its GAP8 Software Development Kit (SDK) and GAPuino Development Board. The GAPuino Boards are available for purchase here and the GAP8 SDK can be downloaded via GitHub.

GreenWaves Technologies GAP9实现超低功耗边缘计算的新维度

GreenWaves Technologies推出的GAP9处理器旨在满足超低功耗边缘计算需求,适用于能量受限的设备,如物联网传感器、可穿戴设备和耳戴式产品。GAP9采用独特的架构,包括Fabric Controller、µDMA和Cluster,支持动态频率和电压缩放,优化功耗。它提供丰富的接口和内存选项,支持RISC-V指令集,并集成了神经网络加速器。GAP9 SDK包含开发工具和库,支持机器学习和音频应用开发,确保高效、安全的边缘计算体验。

GREENWAVES - 处理器,PROCESSOR,GAP9,WEARABLE DEVICES,耳塞,WRISTBANDS,EARBUDS,穿戴式设备,耳机,超低功耗边缘计算,智能建筑传感器,智能眼镜,ULTRA-LOW POWER EDGE COMPUTING,基于图像的建筑传感器,SMART GLASSES,IMAGE-BASED BUILDING SENSORS,电池供电装置,IOT SENSORS,SMART BUILDING SENSORS,HEARABLE PRODUCTS,可听产品,物联网传感器,HEADSETS,袖口,BATTERY OPERATED DEVICES

【产品】支持语音+触摸创新融合的SPV20系列AI语音识别芯片,精准满足用户交互需求

普林芯驰SPV20系列芯片是一款性能优异的AI语音识别芯片。业内领先具有自主知识产权的芯片架构及核心语言引擎,完备的命令词库、软件SDK,组成了定制化的一站式Turnkey方案,支持语音+触摸的创新融合,精准满足用户实际交互需求。

MeiG Smart 5G Platform Helps Upgrade The Live Broadcast Industry

As the world-leading provider of wireless communication modules and solutions, MeiG Smart took the lead in signing the Snapdragon 690 5G solution license with Qualcomm, and became the first IoT module manufacturer with 5G SoC license. Recently, MeiG has launched the industry‘s first 5G smart module SRM900. Snapdragon 690 is Qualcomm‘s first 5G platform that supports 4K HDR(10 bit) video recording and the latest 5th-generation AI Engine. It adopts the latest CPU architecture which improves its performance by 20%.

GAP9下一代处理器,用于便携式设备和智能传感器

GAP9下一代处理器专为可穿戴设备和智能传感器设计,具备多维计算能力,支持环境感知ANC、基于神经网络的降噪、3D音效、多传感器分析等功能。该处理器在音频处理方面表现出色,具有低功耗、低延迟的特点,适用于可穿戴设备、智能安全系统和智能建筑传感器等领域。

GREENWAVES - EMBEDDED AUDIO PROCESSORS,嵌入式音频处理器,处理器,PROCESSOR,GAP9,非洲国民大会,SPOKEN LANGUAGE UNDERSTANDING,SPEAKER DETECTION,SPEAKER IDENTIFICATION,OCCUPANCY MANAGEMENT,HEARABLES,说话人检测,智能传感器,电池供电的智能安全系统,BATTERY-POWERED SMART SECURITY SYSTEMS,监视系统,VOICE SEPARATION,SMART BUILDING SENSORS,DNN-NR,NOISE REDUCTION,语言清晰度,人脸识别,语音驱动用户界面,占用管理,身临其境的3D音效,CONTEXT-AWARE ANC,ACTIVE NOISE CANCELLATION,SPEECH INTELLIGIBILITY,有源噪声消除,说话人识别,可听见的装置,TRULY WIRELESS STEREO EARBUDS,IMMERSIVE 3D SOUND,智能建筑传感器,FACE DETECTION,HEARABLE DEVICES,SMART SENSORS,降噪,VOICE DRIVEN USER INTERFACES,SURVEILLANCE SYSTEMS,人脸检测,FACE IDENTIFICATION,可听见的,ANC,上下文感知ANC,真正的无线立体声耳塞,口语理解,语音分离

GAPPoc : A Family of GAP8-centric Proof Of Concept boards for Edge AI

Our GAP8 application processor chip is great at analyzing and understanding data from IoT sensors, from the simplest to the most complex, in a very tight power envelope – from a few tens of milliwatt in active mode down to a few microwatts in sleep mode.

OMNIVISION High-Performance Global Shutter Image Sensor and Processor Now Available on NVIDIA Holoscan and NVIDIA Jetson Platforms

OMNIVISION announced that its total camera solution, comprising the OG02B10 color global shutter (GS) image sensor and OAX4000 ASIC image signal processor (ISP), is now verified and available with the NVIDIA Holoscan sensor processing platform ands the NVIDIA Jetson™ platform for edge AI and robotics.

电子商城

现货市场

登录 | 立即注册

提交评论